Overview

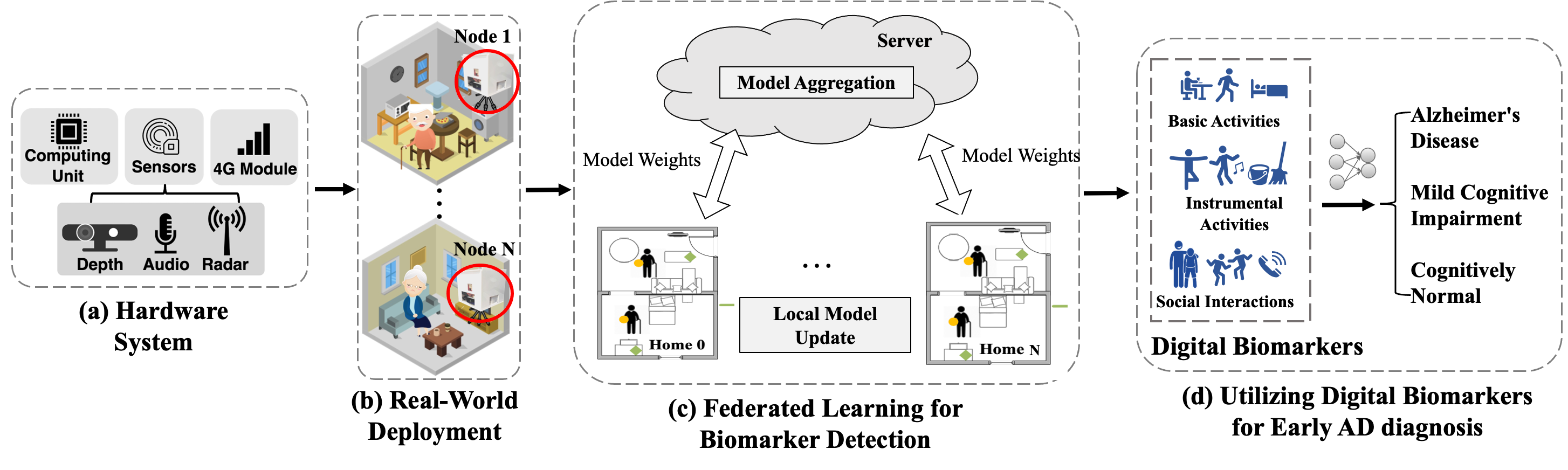

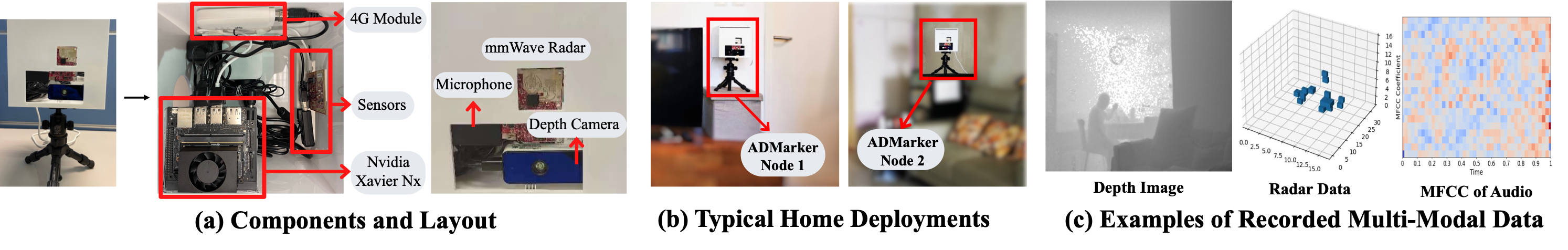

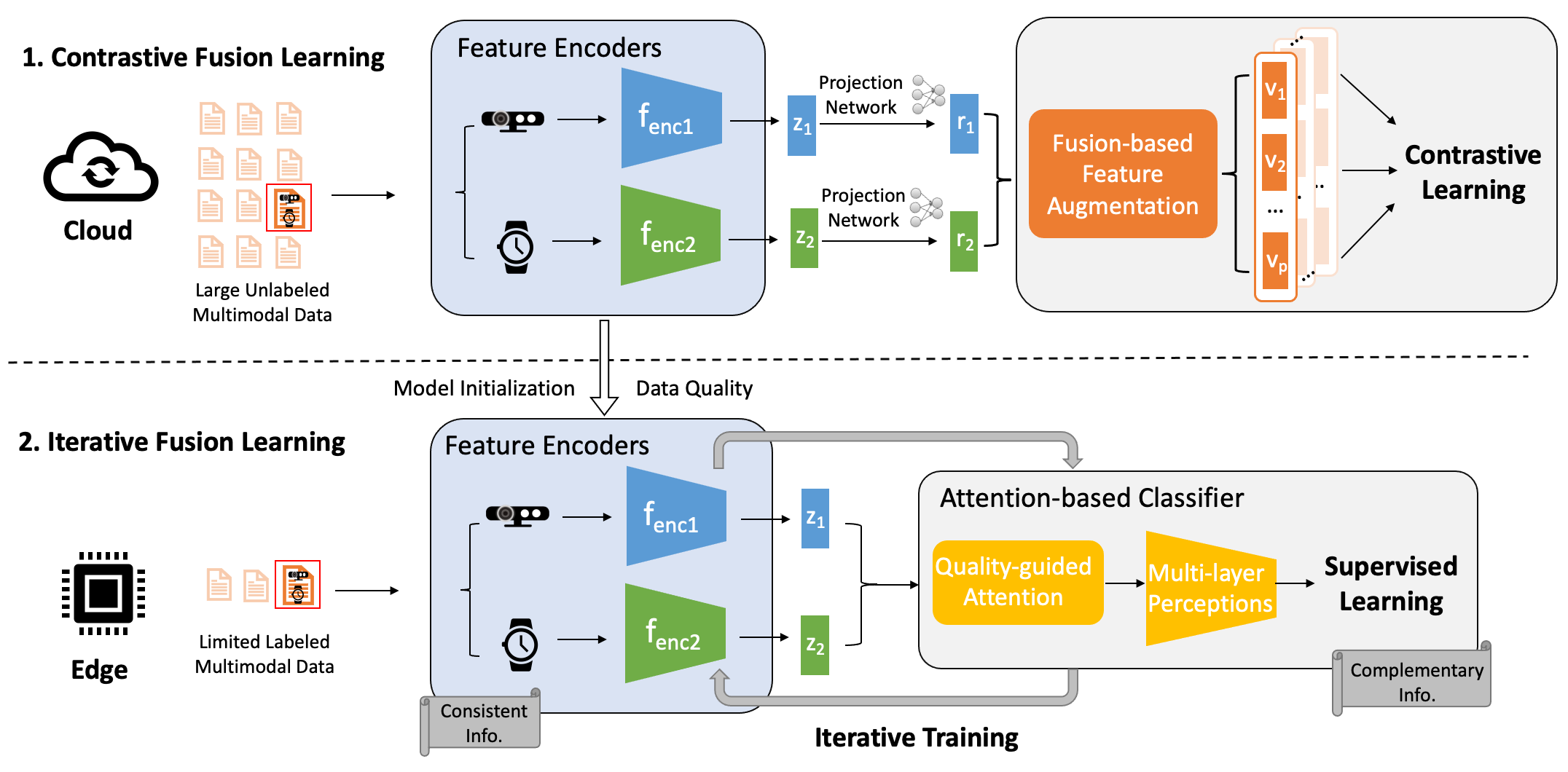

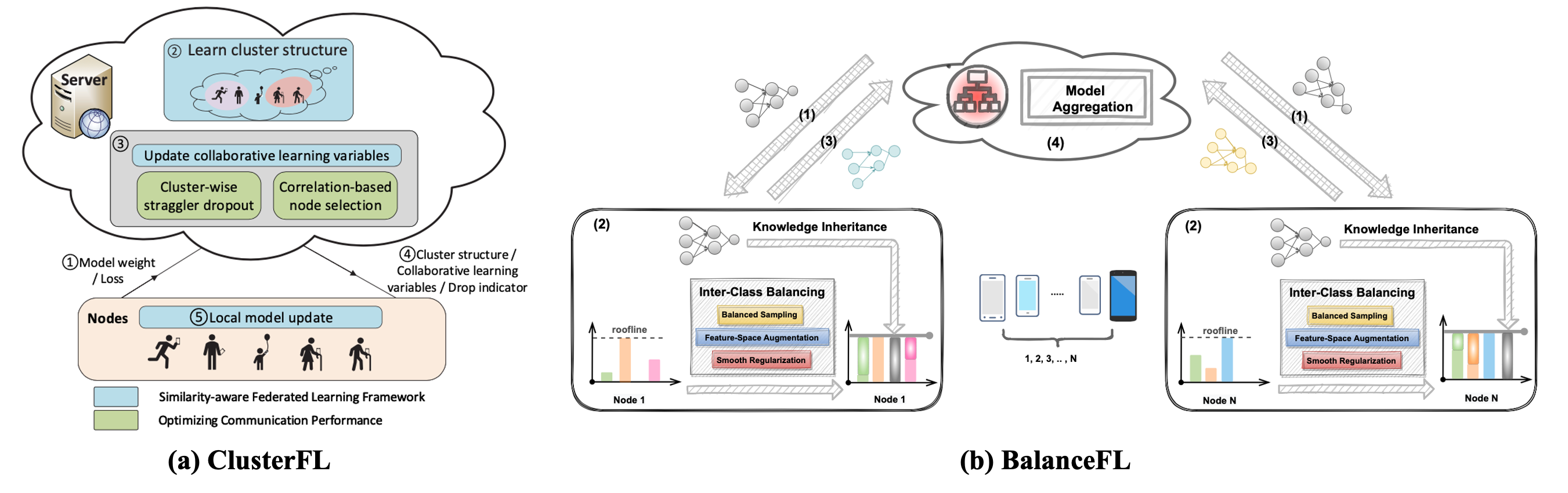

Alzheimer’s Disease (AD) and related dementias are a growing global health challenge as the population ages. A major barrier to treating AD is that many patients are either never diagnosed or diagnosed only at a late stage of the disease. A recent major advance in early AD diagnosis and intervention is the use of AI and sensor devices to capture physiological, behavioral, and lifestyle symptoms of AD (e.g., activities of daily living and social interactions) in natural home environments; these measures are referred to as digital biomarkers. In this project, we propose the first end-to-end system that integrates multi-modal sensors and federated learning algorithms to detect multidimensional AD digital biomarkers in natural living environments. We develop a compact multi-modal hardware system that can operate for up to several months in home environments. On top of this hardware, we design a multi-modal federated learning system that can accurately detect more than 20 digital biomarkers in real time and in a privacy-preserving manner. Together, these components address several major real-world challenges, such as limited data labels, data heterogeneity, and limited computing resources.
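To illustrate why federated learning is privacy-preserving, the following minimal sketch shows generic federated averaging (FedAvg): each home trains locally, and only model weights and sample counts are sent to the server, never raw sensor data. This is not the project's actual algorithm; the function name `federated_average`, the weight layout, and the example values are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# A model is represented here as named lists of floats (an assumed layout).
Weights = Dict[str, List[float]]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighted by each client's local sample count."""
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("need at least one training sample across clients")
    first_weights, _ = updates[0]
    averaged: Weights = {}
    for name, values in first_weights.items():
        acc = [0.0] * len(values)
        for weights, n in updates:
            for i, v in enumerate(weights[name]):
                acc[i] += v * n / total
        averaged[name] = acc
    return averaged


# Two hypothetical homes: only weights and sample counts leave each home.
home_a = ({"layer": [1.0, 2.0]}, 10)
home_b = ({"layer": [3.0, 6.0]}, 30)
global_weights = federated_average([home_a, home_b])
```

Weighting by sample count means a home that contributes more local data has proportionally more influence on the shared model, which is one simple way to cope with uneven data across homes.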

Patient Recruitment and Results: To date, our system has been deployed in a four-week clinical trial involving 91 elderly participants (43 females and 48 males, aged 61 to 93). The participants fell into three groups: 31 with Alzheimer’s Disease, 30 with mild cognitive impairment (MCI), and 30 who were cognitively normal. The results indicate that our system can detect a comprehensive set of digital biomarkers with up to 93.8% accuracy and identify AD with an average accuracy of 88.9%. Our system offers a new platform that allows AD clinicians to characterize and track, longitudinally, the complex correlations among multidimensional interpretable digital biomarkers, patient demographic factors, and AD diagnosis.

Ethics: All data collection in this study was approved by the Institutional Review Board of CUHK and by the Clinical Research Ethics Committee of Joint CUHK and Hong Kong Hospital Authority (New Territories East Cluster).